I’ve been looking for inspiration, or a reason, to keep coding. Last year was pretty rough for me between selling the house and getting rejected by 100+ places (again). To be fair, coding isn’t even close to being my forte; it’s something I dabble in from time to time. Talking with a friend recently, I was reinspired to try again, so I jumped into the deep end… without floaties. I thought web crawling would be easy, and since I want to break into analytics, I figured what better way to practice than to crawl for Mustang prices, compare them over the span of a month, and see where it goes! I have three major Ford dealerships nearby, so this would provide some great data, right?

Nope.

Now, I could just manually key in the information every couple of days for the three dealerships, keeping track of stock numbers and such, but honestly, putting together a crawler to track all of this in a database made so much more sense! Instead of spending an hour or so looking at sites and jotting numbers down, the crawler goes through, finds all the Mustang listings with prices, adds them to the database, and voilà! Done!
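For anyone curious what “adds them to the database” could look like, here’s a minimal sketch using Python’s built-in `sqlite3` module. It assumes each listing comes with a stock number, a dealer name, a title, and a whole-dollar price. The table name `price_history` and the helper functions are hypothetical names made up for illustration, and the prices and dates in the demo are made-up sample data, not real listings.

```python
import sqlite3
from datetime import date


def init_db(conn):
    # One row per car per crawl day. The composite primary key means the
    # same stock number can only have one recorded price per day.
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS price_history (
            stock_number TEXT NOT NULL,
            dealer       TEXT NOT NULL,
            title        TEXT NOT NULL,
            price        INTEGER NOT NULL,  -- whole dollars, e.g. 32995
            seen_on      TEXT NOT NULL,     -- ISO date, so text order == date order
            PRIMARY KEY (stock_number, seen_on)
        )
        """
    )


def record_listing(conn, stock_number, dealer, title, price, seen_on):
    # INSERT OR REPLACE: if the crawler runs twice on the same day,
    # the later price overwrites the earlier one instead of raising an error.
    conn.execute(
        "INSERT OR REPLACE INTO price_history VALUES (?, ?, ?, ?, ?)",
        (stock_number, dealer, title, price, seen_on.isoformat()),
    )
    conn.commit()


def price_changes(conn, stock_number):
    # Returns (date, price, change since the previous crawl) in date order.
    rows = conn.execute(
        "SELECT seen_on, price FROM price_history "
        "WHERE stock_number = ? ORDER BY seen_on",
        (stock_number,),
    ).fetchall()
    changes = []
    previous = None
    for seen_on, price in rows:
        delta = 0 if previous is None else price - previous
        changes.append((seen_on, price, delta))
        previous = price
    return changes


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # use a file path to keep data between runs
    init_db(conn)
    # Made-up sample data: one hypothetical stock number seen on two Sundays.
    record_listing(conn, "P1234", "Dealer A", "2019 Ford Mustang", 32995, date(2024, 1, 7))
    record_listing(conn, "P1234", "Dealer A", "2019 Ford Mustang", 31995, date(2024, 1, 14))
    for row in price_changes(conn, "P1234"):
        print(row)
```

Keying on the stock number is what lets the same car be followed from crawl to crawl, which is exactly the part that gets tedious to do by hand.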

Now, to be fair, the original project worked fine; it just only grabbed a handful of cars. I hadn’t considered that dealer websites are often built with JavaScript and pull their listings from an API. I figured the inventory was loaded onto some local server that the site connects to, and the information was drawn from there. Well, it is, in a sense, but the server is a remote one owned by a service company, and scripts running in the background pull the listings into the page. What I had built was an HTML-based page reader, which only works when the data is embedded directly in the HTML, such as:

```html
<div class="vehicle-card">
  <h2 class="title">2019 Ford Mustang</h2>
  <span class="primary-price">$32,995</span>
  <div class="mileage">43,049 miles</div>
</div>
```
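When the data really is sitting in the HTML like that, even Python’s built-in `html.parser` can pull it out. This is a minimal sketch that assumes the exact class names from the snippet above (`vehicle-card`, `title`, `primary-price`, `mileage`); `VehicleCardParser`, `parse_cards`, and `digits_to_int` are hypothetical names. On a JavaScript-driven site, the HTML the server sends often contains none of these cards, so getting an empty list back is a decent hint that the listings are being loaded some other way.

```python
from html.parser import HTMLParser

# Class names copied from the snippet above; real dealer pages may differ.
FIELD_CLASSES = {"title": "title", "primary-price": "price", "mileage": "mileage"}


def digits_to_int(text):
    # "$32,995" -> 32995, "43,049 miles" -> 43049. Assumes whole numbers,
    # so a price written with cents ("$32,995.00") would need extra handling.
    digits = "".join(ch for ch in (text or "") if ch.isdigit())
    return int(digits) if digits else None


class VehicleCardParser(HTMLParser):
    def __init__(self):
        super().__init__()
        self.cards = []     # one dict per <div class="vehicle-card">
        self._field = None  # which field the current text belongs to

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "vehicle-card" in classes:
            self.cards.append({})
        elif self.cards:
            for cls in classes:
                if cls in FIELD_CLASSES:
                    self._field = FIELD_CLASSES[cls]

    def handle_endtag(self, tag):
        # Simplification: any closing tag ends the current field.
        self._field = None

    def handle_data(self, data):
        if self._field and data.strip():
            self.cards[-1][self._field] = data.strip()


def parse_cards(html):
    parser = VehicleCardParser()
    parser.feed(html)
    parser.close()
    return [
        {
            "title": card.get("title"),
            "price": digits_to_int(card.get("price")),
            "mileage": digits_to_int(card.get("mileage")),
        }
        for card in parser.cards
    ]
```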

Because the listings on these sites are loaded by that extra JavaScript rather than sitting in the HTML, my pulls were pretty poor in quality. I think, at most, I got four cars across four dealers (I added a sister dealership in the greater LR area for additional pulls). So, using ChatGPT as a much more thorough technical mind, I tried to figure out how to write code that would pull out the information I needed to get where I wanted to be: a charted path of price changes for a certain group of cars across the four-dealership area. We looked for API endpoints and searched for keywords like “inventory” and “mustang” in the DevTools of my Edge browser, and thought we had truly made some progress.
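If DevTools does turn up an inventory request (the Network tab, filtered to Fetch/XHR, is where those tend to show up), reading it can be simpler than parsing HTML, because the response is often structured JSON. This sketch is hedged heavily: the URL would be whatever you find in DevTools, and the `vehicles`, `stockNumber`, `year`, `model`, and `price` keys are a hypothetical schema made up for illustration, since every dealer platform names things differently. It also doesn’t handle auth tokens, paging, or a site’s terms of use.

```python
import json
import urllib.request


def fetch_json(url):
    # Plain GET for an endpoint spotted in DevTools. Some sites also expect
    # specific headers or tokens; this sketch doesn't handle those.
    request = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)


def extract_mustangs(payload):
    # HYPOTHETICAL schema: {"vehicles": [{"stockNumber": ..., "year": ...,
    # "model": ..., "price": ...}, ...]}. Rename the keys to match the real response.
    mustangs = []
    for vehicle in payload.get("vehicles", []):
        # Exact match on purpose, so "Mustang Mach-E" is skipped.
        if str(vehicle.get("model", "")).strip().lower() != "mustang":
            continue
        mustangs.append(
            {
                "stock_number": vehicle.get("stockNumber"),
                "year": vehicle.get("year"),
                "price": vehicle.get("price"),
            }
        )
    return mustangs


if __name__ == "__main__":
    # Made-up sample response, shaped like the hypothetical schema above.
    sample = json.loads(
        '{"vehicles": ['
        '{"stockNumber": "P1234", "year": 2019, "model": "Mustang", "price": 32995},'
        '{"stockNumber": "M5678", "year": 2023, "model": "Mustang Mach-E", "price": 41000}'
        "]}"
    )
    print(extract_mustangs(sample))
```

The exact model match is a deliberate choice: a looser `"mustang" in model` check would also pull in the electric Mustang Mach-E, which probably isn’t the comparison you want.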

But what happens when you let even just a little time elapse?

I waited about a week to get back into it, because Sundays are pretty much my only days off when I can give this any real attention. So this morning, after lounging for a bit, I got to work and picked up where I thought I’d left off.

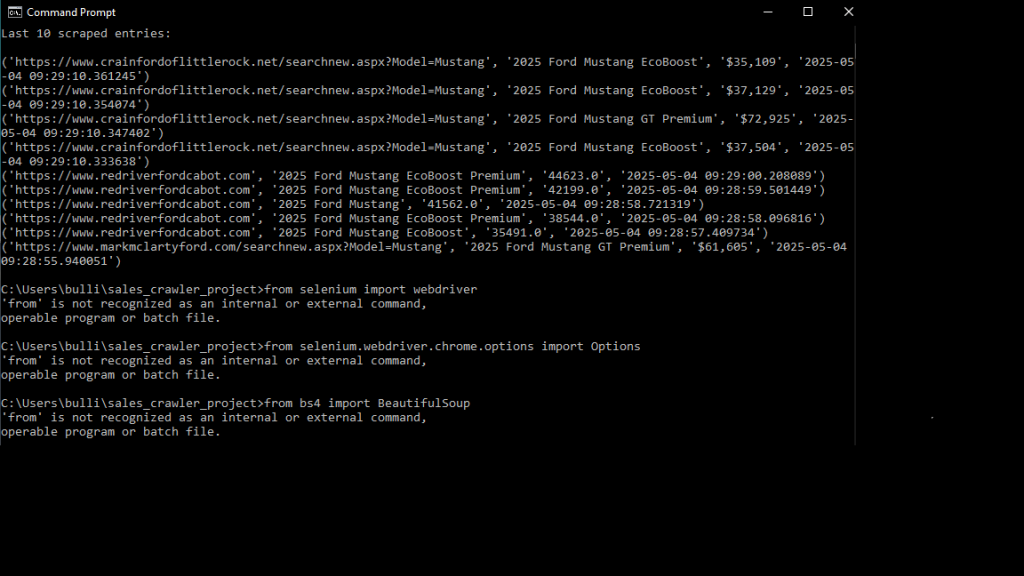

Now I get errors like this!

So.

I promise I won’t let projects sit for a week at a time. And…

Do something a little easier. ><